BGSU education researchers undertake a 5-year project to revamp mathematics word problem assessments for students through computer adaptive testing


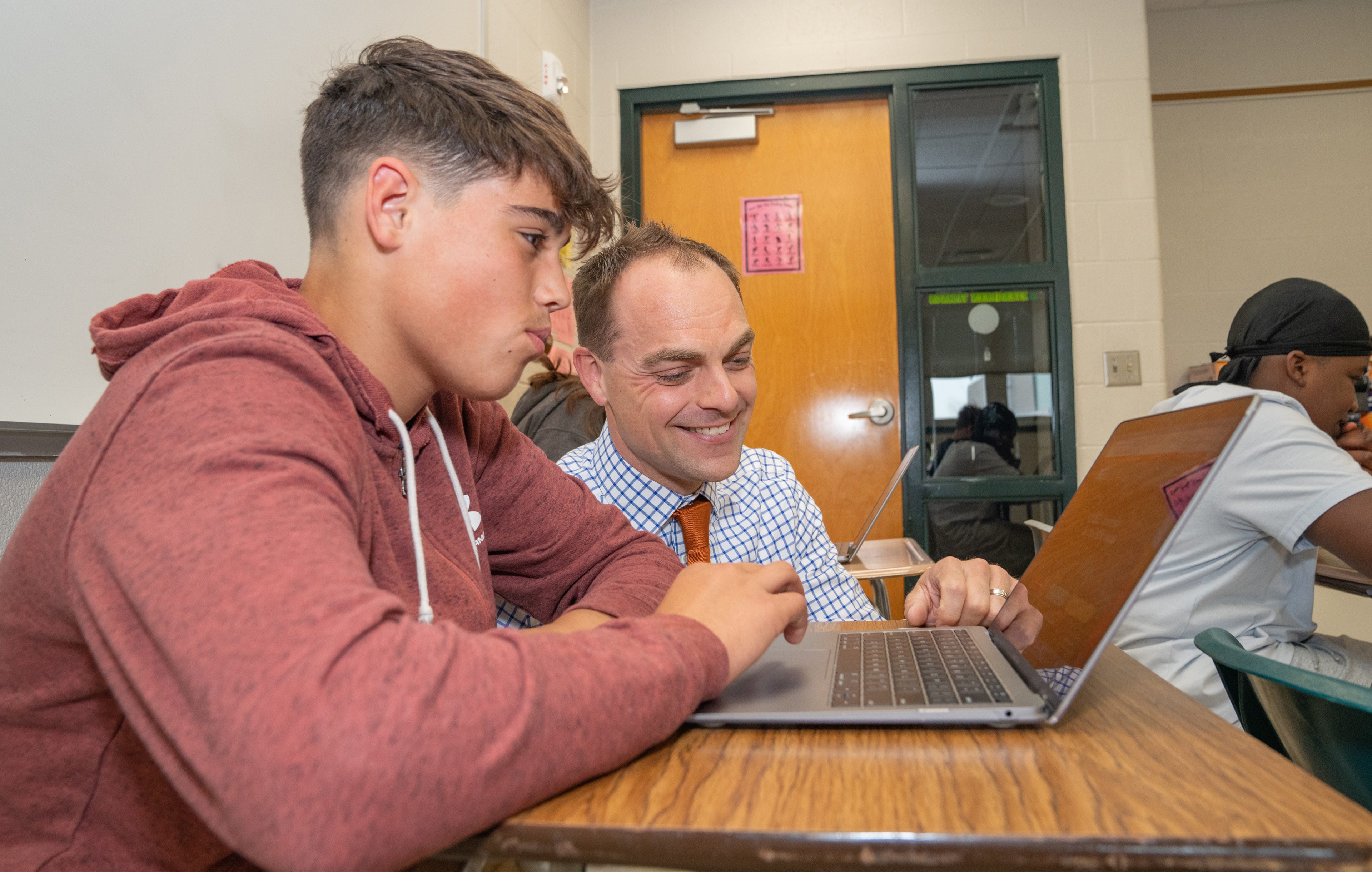

College of Education and Human Development faculty Dr. Jonathan Bostic and Dr. Gabriel Matney are using a nearly $927K NSF grant to study word-problem assessment in mathematics

If 28 students are asked to determine which will arrive first, Freighter 1, which is traveling at 12 knots and is halfway through its journey 10 hours after leaving its port of origin, or Freighter 2, which is 35% of the way to its destination but traveling at 15 knots, how many of them will hit a language roadblock that keeps them from engaging with the word problem?

The answer is likely more than a few. Two Bowling Green State University researchers are undertaking a five-year research project to create a better, more efficient form of mathematics word problem assessment for students.

Dr. Jonathan Bostic and Dr. Gabriel Matney, both professors in the BGSU School of Inclusive Teacher Education in the College of Education and Human Development, are using a National Science Foundation grant of nearly $927,000 to study and advance the use of computer adaptive assessment within mathematics education.

The project, called DEAP-CAT, brings together researchers at other universities across multiple states, including Ohio, and uses science-based methodology to develop a better way to test students’ problem-solving abilities.

In their work with partner districts, Bostic and Matney often heard stakeholders ask for better, shorter mathematics tests, which became the main goal of the DEAP-CAT project.

“We were trying to respond to our clients, which is our communities,” Bostic said. “This is what teachers, administrators, students and families want, and also maintains what we believe is important: Better tests, more useful information from those tests, less time spent on testing and more time spent helping others learn and grow.”


Researchers recently concluded the second full year of the project and have created and validated more than 540 mathematics word problems that are realistic, complex, meet state standards, and can be solved in multiple ways.

“We frame the problem in a way that when a student reads it, they have to stop and think,” Bostic said. “That’s critical thinking because it’s not immediately solvable. But it has to be open: there are a minimum of two but usually three to five different ways that a student in that grade level could solve it, so that means many entry points where any learner can have a chance at it.”

A better way

At one point or another, almost everyone can recall being lost in a mathematics word problem.

While word problems aim to apply mathematics to the real world, they often fall into a number of pitfalls: unrealistic premises, jargon, overly complex language, incorrect assumptions or confusing word choice.

When students can’t find an entry point into a problem, Matney said, they are less likely to complete the problem they are being asked to solve.

“Whenever you’re encountering a problem and there’s something that you can’t make sense of, either via your experience or via your imagination, you will have more difficulty entering the problem in order to do the mathematics — and that’s not the point of a mathematics word problem,” Matney said. “The mathematics word problem should allow you to enter the context and utilize mathematics to solve the problem, whatever it might be.”

One of the core beliefs behind Bostic and Matney’s research is that every question should be realistic and understandable.

In other words, the questions avoid premises about jumping from train to train, completing unusual tasks or buying outrageous quantities of things, because those contexts often keep students from actually solving the problem.

“We don’t want students to encounter unrealistic things like you see in word problems that put you in a scenario where you have to carry 76 watermelons out of a grocery store, for some reason,” Matney said. “We want these problems to be imagined in their purview.”

Many word problems also become confusing because of “assumed lived experiences”: knowledge the question writer assumes everyone shares, but that may be unfamiliar to some students.

The term “power play” in a word problem about a hockey game, for instance, might be obvious to sports fans, but for a student who has never seen a hockey game, the phrase can become a hangup that keeps them from solving the problem at hand.

Further, researchers wrote all of their questions at or below the student’s reading grade level. Because the tests measure a student’s mathematical problem-solving ability, Matney said, students should be able to understand what each question is asking them to do.

“We don’t want reading to be a huge factor in whether or not the student demonstrates their proficiency in mathematical problem solving,” Matney said. “We need to make sure that the item’s readability is lower than their grade level to make sure the student can definitely enter the problem based on their age and reading level.”

A major advantage of computer adaptive assessments is that they can be tailored to each student.

Unlike static tests, in which every student receives the same assessment with questions in the same order, a computer adaptive test responds to each answer in real time. It then adjusts the difficulty of the next question based on how the student answered the previous ones, and every question can still be solved in multiple ways.

The process can quickly and efficiently gauge a student’s ability in relation to their grade level in as few as five questions, allowing for shorter tests and more instruction time.
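For readers curious about the mechanics, the adaptive loop described above can be sketched roughly as follows. This is a minimal illustration, not the DEAP-CAT project’s method: it assumes a Rasch (one-parameter logistic) item response model, a hypothetical item bank with made-up difficulty values, and hypothetical functions such as `run_cat`. After each answer it re-estimates the student’s ability, then picks the unused question whose difficulty is closest to that estimate. It stops after five questions only to mirror the figure quoted above; operational adaptive tests often use richer models and stopping rules.

```python
import math
from collections.abc import Callable

# Assumption: a Rasch (1PL) model, where the chance of a correct answer
# depends only on the gap between student ability and item difficulty.
def rasch_prob(theta: float, difficulty: float) -> float:
    """Probability of a correct answer under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))

def eap_estimate(responses: list[tuple[float, bool]]) -> float:
    """Expected a posteriori ability estimate on a grid with a standard normal prior."""
    grid = [i / 10 for i in range(-40, 41)]  # ability values from -4.0 to 4.0
    weights = []
    for theta in grid:
        w = math.exp(-theta * theta / 2)  # unnormalized N(0, 1) prior
        for difficulty, correct in responses:
            p = rasch_prob(theta, difficulty)
            w *= p if correct else 1.0 - p  # likelihood of each observed answer
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)

def next_item(bank: dict[str, float], used: set[str], theta: float) -> str:
    """Choose the unused item whose difficulty is closest to the current estimate.
    Under the Rasch model, that item is the most informative one."""
    candidates = [name for name in bank if name not in used]
    return min(candidates, key=lambda name: abs(bank[name] - theta))

def run_cat(
    bank: dict[str, float],
    answer: Callable[[str], bool],
    max_items: int = 5,
) -> tuple[float, list[str]]:
    """Give up to max_items questions adaptively; return the final estimate and item order."""
    theta, used, order, responses = 0.0, set(), [], []
    for _ in range(min(max_items, len(bank))):
        item = next_item(bank, used, theta)
        used.add(item)
        order.append(item)
        responses.append((bank[item], answer(item)))
        theta = eap_estimate(responses)  # re-estimate after every answer
    return theta, order

# Hypothetical item bank: item name -> difficulty on the ability scale.
bank = {"A": -2.0, "B": -1.0, "C": -0.5, "D": 0.0, "E": 0.5, "F": 1.0, "G": 2.0}
# Simulated student who answers correctly whenever difficulty is at most 0.5.
theta, order = run_cat(bank, lambda item: bank[item] <= 0.5)
print(order, round(theta, 2))
```

Because every student starts at the same estimate, the first question is the same for everyone; from the second question on, the path through the item bank depends on each student’s answers.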

“We want to give teachers the data they want and need to make decisions about their instruction,” Bostic said. “We believe in more time teaching, more time for instruction and less time assessing, so we wanted to do assessing in shorter times but with more data. We have the personpower and the team to do this right.”


The path forward

Bostic and Matney’s research is increasingly relevant to the modern workforce, which often asks skilled workers in many fields to use mathematics to solve real-world problems.

Many professionals are asked to quantify a situation and devise an effective solution, which is exactly what contextual mathematics word problems aim to do.

“One of the things students need for the work that they’re going to be doing is to be able to make sense of issues at hand and try to find solution pathways that will work,” Matney said. “After they’ve found pathways that work, asking which one is the most efficient is one that we encounter in many jobs that we do today — much more so than in the past.”

Methods that quickly and efficiently measure a student’s ability give teachers a key starting point. Knowing a student’s strengths, Bostic said, is powerful information.

“Is the test going to be a panacea or a silver bullet that solves everything? No. But is it going to help people? We think so,” he said.

The project, which runs through July 2026, aims to find better ways to test students and engage the students taking those tests.

Better questions can lead to better student engagement, Bostic said, and engagement is one of the project’s core goals.

“We believe that when students feel a connection, they’re more engaged, and that’s important,” Bostic said. “Engagement in STEM is critical and we need more people to be engaged in STEM fields, and better tests are a way we can do it.”


Media Contact | Michael Bratton | mbratto@bgsu.edu | 419-372-6349

Updated: 09/14/2023 08:28AM